39 tf dataset get labels

Does validation_split in tf.keras.preprocessing.image_dataset_from ... For a binary image classification problem (CNN using tf.keras), my image data is separated into folders (train, validation, test), each with subfolders for two balanced classes. ...

Specifying class or sample weights in Keras for one-hot encoded labels in a TF Dataset.

Why is val accuracy 100% within 2 epochs and incorrectly predicting new ...

tf.data - get labels from shuffled dataset without shuffle: `for images, labels in val_ds: preds = model.predict(images); acc_sc = acc(labels, tf.argmax(preds, axis=1)); print(acc_sc); break  # or accumulate and average score from all batches`. But is there a better way? Yes: you can calculate the accuracy for each batch and average it.
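One caveat on "accumulate and average": the mean of per-batch accuracies equals the overall accuracy only when every batch has the same size. The sketch below illustrates the difference in plain Python, assuming each batch has already been reduced to a pair of label and predicted-class lists (with tf.data these could come from `labels.numpy()` and `tf.argmax(preds, axis=1).numpy()`). The function names and the sample data are illustrative, not part of any library.

```python
def mean_of_batch_accuracies(batches):
    """Average the accuracy computed separately on each batch."""
    scores = []
    for labels, predicted in batches:
        correct = sum(int(y == p) for y, p in zip(labels, predicted))
        scores.append(correct / len(labels))
    return sum(scores) / len(scores)


def overall_accuracy(batches):
    """Accumulate correct and total counts across batches, then divide once."""
    correct = 0
    total = 0
    for labels, predicted in batches:
        correct += sum(int(y == p) for y, p in zip(labels, predicted))
        total += len(labels)
    return correct / total


# Hypothetical data: a full batch of four examples and a final batch of one.
batches = [
    ([0, 1, 1, 0], [0, 1, 0, 0]),  # 3 of 4 correct
    ([1], [0]),                    # 0 of 1 correct
]

print(mean_of_batch_accuracies(batches))  # 0.375
print(overall_accuracy(batches))          # 0.6
```

Accumulating counts is the safer default when the last batch may be smaller than the others.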

How can I use tf.data.experimental.make_csv_dataset to make a dataset ... `column_names = ['Label', 'Sentence']`, `batchsize = 32`, `label = column_names[0]`, `train_dataset = tf.data.experimental.make_csv_dataset('datasettrain.csv', batchsize, column_names=column_names, label_name=label, num_epochs=1)`. Because the batches are ordered dicts, I cannot do certain things that a dataset loaded directly from tfds ...
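With `label_name` set, `make_csv_dataset` yields `(features, labels)` batches in which `features` is a dictionary keyed by column name. One possible way to get plain `(sentence, label)` pairs is to map a small selector over the dataset. The sketch below shows only the selector, exercised on an ordinary `OrderedDict` standing in for a batch; `select_sentence` is an illustrative name and the sample values are made up.

```python
from collections import OrderedDict


def select_sentence(features, label):
    """Pick the 'Sentence' column out of the feature dict and keep the label."""
    return features["Sentence"], label


# Stand-in for one batch; in a real pipeline the values would be tensors.
features = OrderedDict([("Sentence", ["good film", "too long"])])
labels = [1, 0]

sentences, labels_out = select_sentence(features, labels)
print(sentences)   # ['good film', 'too long']
print(labels_out)  # [1, 0]

# Assumed usage with the dataset from the question:
# train_dataset = train_dataset.map(select_sentence)
```

Because `Dataset.map` unpacks tuple elements into separate arguments, the same two-argument function should work unchanged on the real dataset.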

Tf dataset get labels

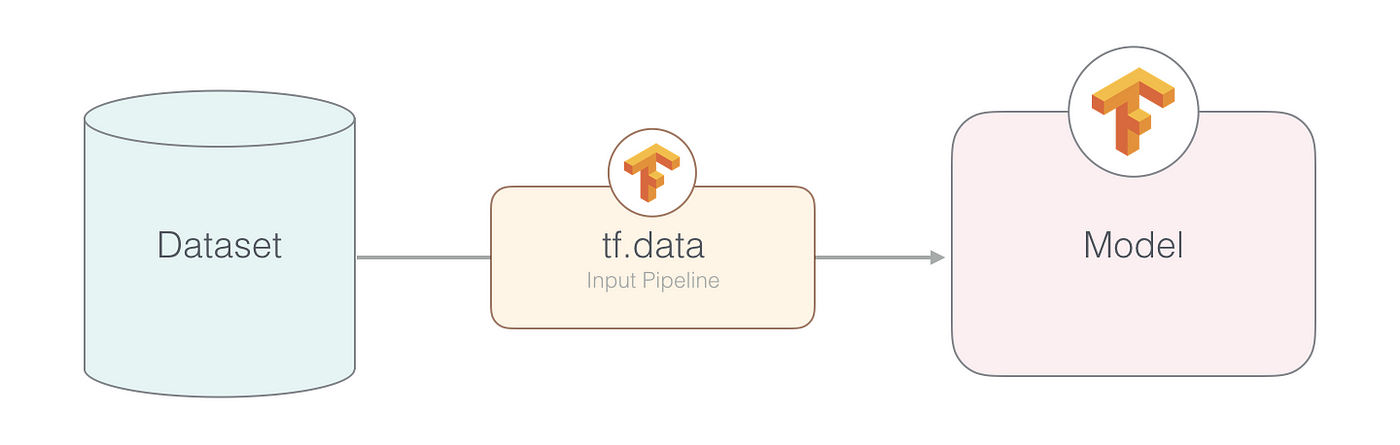

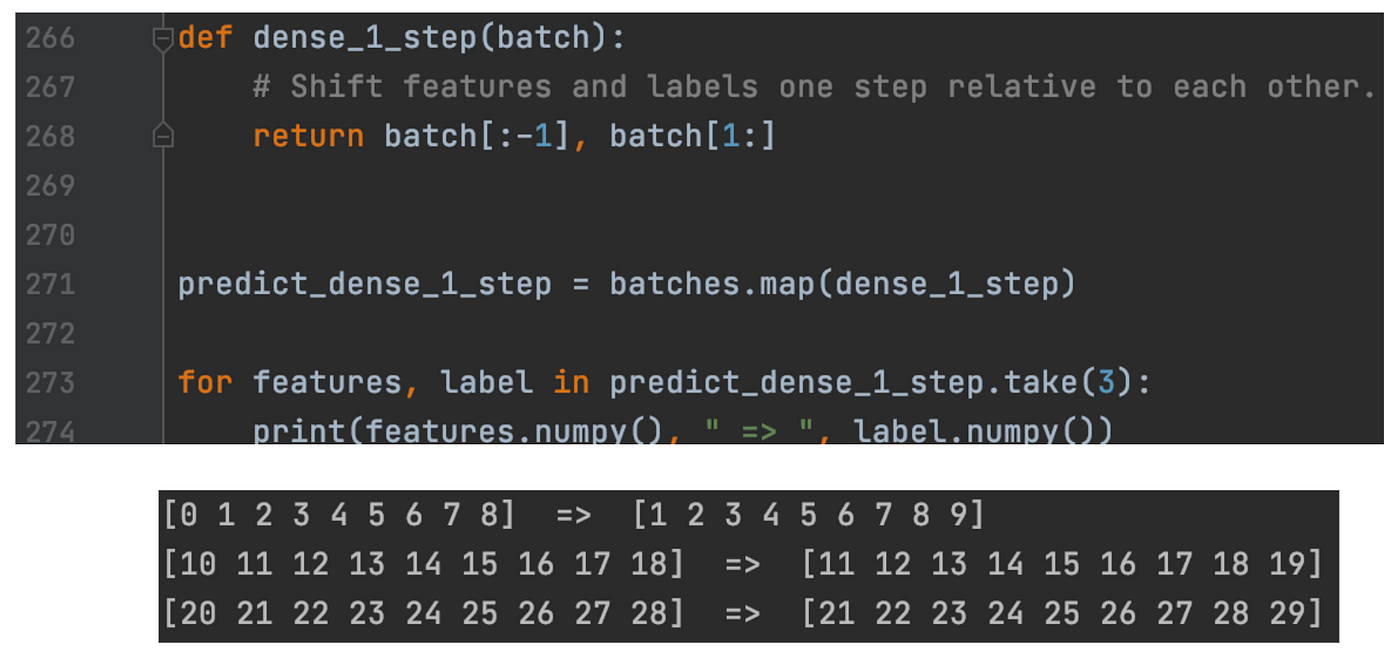

Text Classification with BERT Tokenizer and TF 2.0 in Python - Stack Abuse: `processed_dataset = tf.data.Dataset.from_generator(lambda: sorted_reviews_labels, output_types=(tf.int32, tf.int32))`. Finally, we can now pad our dataset for each batch. The batch size we are going to use is 32, which means that after processing 32 reviews, the weights of the neural network will be updated.

How to implement tf.data.Dataset.window into _get_serve_tf_examples_fn ... `def _input_fn(file_pattern, tf_transform_output, num_epochs=None, batch_size=BATCH_SIZE) -> tf.data.Dataset:` Create batches of features and labels from TF Records. Args: file_pattern - List of files or patterns of file paths containing Example records. tf_transform_output - transform output graph. num_epochs - Integer specifying the number of times to read through the dataset.

A Gentle Introduction to the tensorflow.data API - Machine Learning Mastery: Yet another way of providing data is to use tf.data dataset. In this tutorial, you will see how you can use the tf.data dataset for a Keras model. After finishing this tutorial, you will learn: How to create and use the tf.data dataset; The benefit of doing so compared to a generator function. Let's get started.
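The per-batch padding mentioned in the Stack Abuse excerpt means each batch is padded only to the length of its own longest sequence, not to a global maximum. Here is a plain-Python sketch of that idea, assuming token-id lists and a padding id of 0 (both assumptions); in tf.data the corresponding method is `padded_batch`. The helper name `pad_batches` and the sample ids are invented for illustration.

```python
def pad_batches(sequences, batch_size, pad_value=0):
    """Group sequences into batches and pad each batch to its own max length."""
    batches = []
    for start in range(0, len(sequences), batch_size):
        batch = sequences[start:start + batch_size]
        width = max(len(seq) for seq in batch)
        batches.append([seq + [pad_value] * (width - len(seq)) for seq in batch])
    return batches


# Hypothetical token ids, ordered by length so neighbours need little padding.
reviews = [[7], [3, 9], [4, 1, 8], [5, 2, 6, 1]]
padded = pad_batches(reviews, batch_size=2)
print(padded)  # [[[7, 0], [3, 9]], [[4, 1, 8, 0], [5, 2, 6, 1]]]
```

Sorting by length before batching keeps the amount of padding per batch small.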

Dataset object has no attribute `to_tf_dataset` · Issue #3304 · huggingface/datasets · GitHub.

How to apply KNN algorithm using tf - ProjectPro: Recipe Objective. Step 1 - Import library. Step 2 - Load the dataset. Step 3 - Perform one hot encoding. Step 4 - Normalize the data. Step 5 - Split the data in train and test. Step 6 - Define features. Step 7 - Define manhattan distance and nearest k points. Step 8 - Training and evaluation.

tfdf.keras.pd_dataframe_to_tf_dataset - TensorFlow: Ensures columns have uniform types. If "label" is provided, separate it as a second channel in the tf.Dataset (as expected by Keras). If "weight" is provided, separate it as a third channel in the tf.Dataset (as expected by Keras). If "task" is provided, ensure the correct dtype of the label.

Possible shuffling issue with using tf.data.Dataset.list_files ... You will get non-deterministic results unless you set shuffle=False or set a seed, as per the docs of tf.data.Dataset.list_files, and this is the intended behavior. Even though we use the same dataset name twice in tf.data.Dataset.zip((inputs, inputs)), it is actually iterating over the two copies independently and selecting values from each. I don't see this as a bug or issue to be fixed.

Hugging Face Transformers with Keras: Fine-tune a non-English BERT for ... Fine-tuning the model using Keras. Now that our dataset is processed, we can download the pretrained model and fine-tune it. But before we can do this we need to convert our Hugging Face datasets Dataset into a tf.data.Dataset. For this we will use the .to_tf_dataset method and a data collator for token-classification (Data collators are objects that will form a batch by using a list of dataset ...

tf_flowers | TensorFlow Datasets

image_dataset_from_directory: Create a dataset from a directory in ... Then calling image_dataset_from_directory(main_directory, labels='inferred') will return a tf.data.Dataset that yields batches of images from the subdirectories class_a and class_b, together with labels 0 and 1 (0 corresponding to class_a and 1 corresponding to class_b). Supported image formats: jpeg, png, bmp, gif. Animated gifs are truncated to the first frame.

Train your model with custom datasets in TensorFlow: The loading process of a custom dataset can be divided into the following steps: 1. Get the path of all pictures. 2. Get labels and convert them to numbers. 3. Read the picture and perform corresponding preprocessing. 4. Pack pictures and labels. The following will take importing the training set (dataset/Training) in TensorFlow as an example.
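Steps 1, 2 and 4 of the custom-dataset recipe (collect paths, turn labels into numbers, pair them up) can be sketched with the standard library alone. The sketch assumes one subdirectory per class and assigns indices in sorted name order, the same alphanumeric convention `image_dataset_from_directory` uses for inferred labels. `list_labeled_images` and the temporary directory layout are made up for illustration, and reading and preprocessing the pixels (step 3) is left out.

```python
import tempfile
from pathlib import Path


def list_labeled_images(root, extensions=(".jpeg", ".jpg", ".png", ".bmp", ".gif")):
    """Return (class_names, [(path, label_index), ...]) for a class-per-folder tree."""
    root = Path(root)
    class_names = sorted(p.name for p in root.iterdir() if p.is_dir())
    class_to_index = {name: index for index, name in enumerate(class_names)}
    samples = []
    for name in class_names:
        for path in sorted((root / name).iterdir()):
            if path.suffix.lower() in extensions:
                samples.append((str(path), class_to_index[name]))
    return class_names, samples


# Build a tiny throwaway directory tree so the sketch runs anywhere.
with tempfile.TemporaryDirectory() as main_directory:
    layout = [("class_b", "b1.png"), ("class_a", "a1.jpg"), ("class_a", "a2.png")]
    for class_name, file_name in layout:
        folder = Path(main_directory) / class_name
        folder.mkdir(exist_ok=True)
        (folder / file_name).touch()

    class_names, samples = list_labeled_images(main_directory)
    labels = [label for _, label in samples]

print(class_names)  # ['class_a', 'class_b']
print(labels)       # [0, 0, 1]
```

The resulting path and label lists could then be handed to something like `tf.data.Dataset.from_tensor_slices` and mapped through an image-decoding function.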

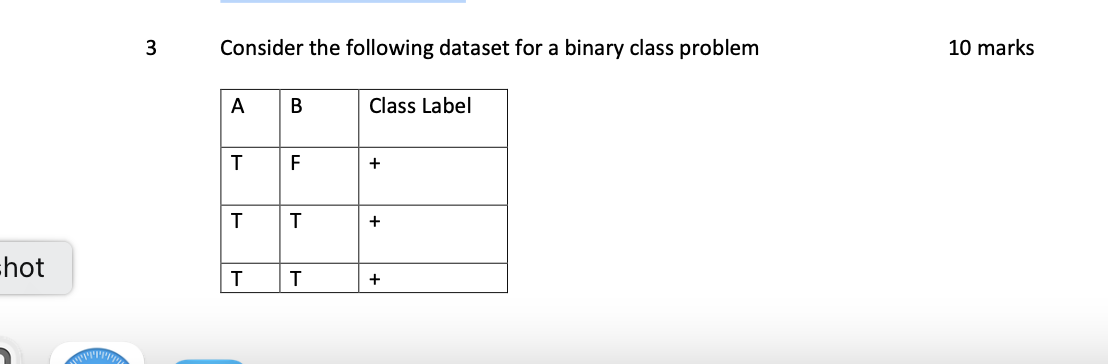

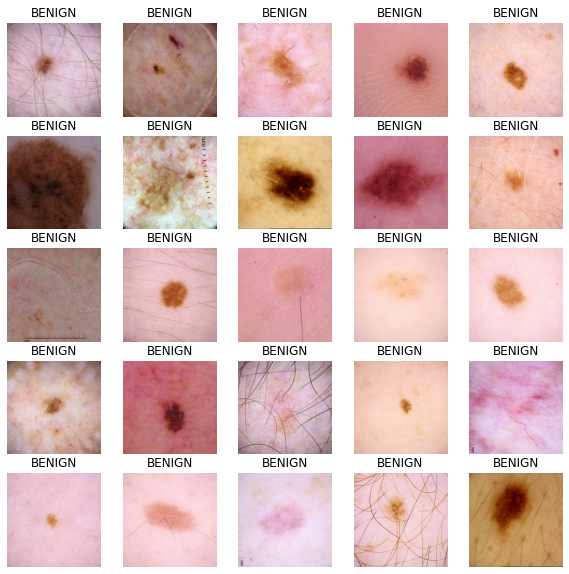

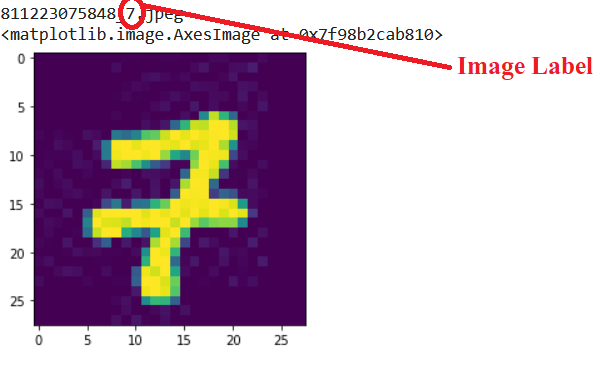

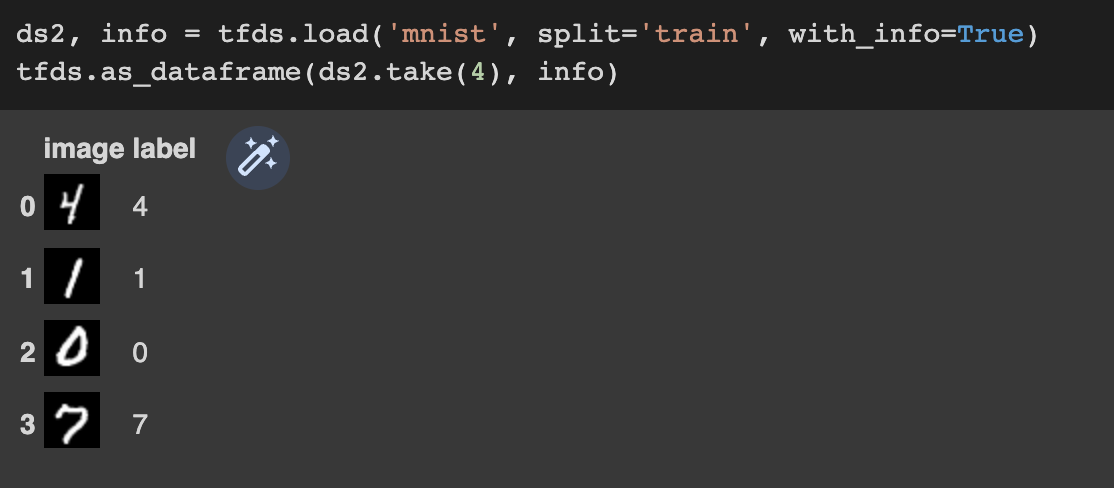

Using tf.keras.utils.image_dataset_from_directory with label list: image_dataset_from_directory() treats the directories in the current path as the labels, then opens those directories and takes the images inside them as data. In your case, it is reading the images/data as classes and trying to open them, which is not possible, hence the errors.

tfds.visualization.show_examples | TensorFlow Datasets: This function is for interactive use (Colab, Jupyter). It displays and returns a plot of (rows*columns) images from a tf.data.Dataset. Usage: `ds, ds_info = tfds.load('cifar10', split='train', with_info=True)`, then `fig = tfds.show_examples(ds, ds_info)`.

Image Augmentation with Keras Preprocessing Layers and tf.image: In this tutorial, you will use the citrus leaves images, which is a small dataset of less than 100MB. It can be downloaded from tensorflow_datasets as follows: `import tensorflow_datasets as tfds`, then `ds, meta = tfds.load('citrus_leaves', with_info=True, split='train', shuffle_files=True)`.

Classification with TensorFlow Decision Forests - Keras: Prepare the data. This example uses the United States Census Income Dataset provided by the UC Irvine Machine Learning Repository. The task is binary classification to determine whether a person makes over 50K a year. The dataset includes ~300K instances with 41 input features: 7 numerical features and 34 categorical features.

How to Finetune BERT for Text Classification ... - Victor Dibia: Assuming we have a dataframe with two columns, text and label, we can use the following steps to create a tf.data.Dataset object that is used to train a keras model. Split dataframe into train and test. `from transformers import AutoModelForSequenceClassification, TFAutoModelForSequenceClassification, TFBertForSequenceClassification`

Classify structured data using Keras preprocessing layers Next, create a utility function that converts each training, validation, and test set DataFrame into a tf.data.Dataset, then shuffles and batches the data. Note: If you were working with a very large CSV file (so large that it does not fit into memory), you would use the tf.data API to read it from disk directly.

Tensorflow.js tf.data.Dataset.filter() Function - GeeksforGeeks: Tensorflow.js is an open-source library developed by Google for running machine learning models and deep learning neural networks in the browser or in a Node environment. It also lets developers build ML models in JavaScript and use ML directly in the browser or in Node.js. The tf.data.Dataset.filter() function is used to ...

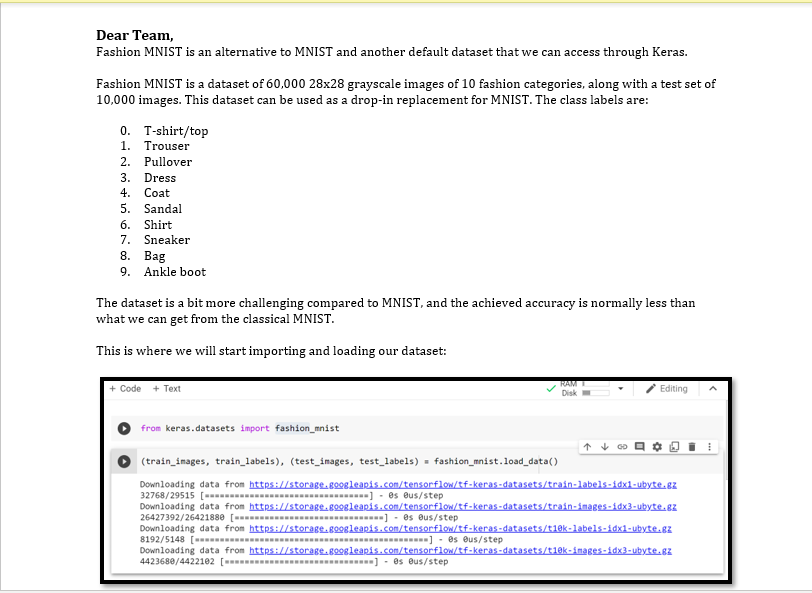

Custom training with tf.distribute.Strategy | TensorFlow Core: Download the Fashion MNIST dataset: `fashion_mnist = tf.keras.datasets.fashion_mnist`, then `(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()`. `# Add a dimension to the array -> new shape == (28, 28, 1)` `# This is done because the first layer in our model is a convolutional` ...

dataset_prefetch: Creates a Dataset that prefetches elements from this ... all_nominal: Find all nominal variables. all_numeric: Specify all numeric variables. as_array_iterator: Convert tf_dataset to an iterator that yields R arrays. as_tf_dataset: Add the tf_dataset class to a dataset. choose_from_datasets: Creates a dataset that deterministically chooses elements... dataset_batch: Combines consecutive elements of this dataset into batches.

How to get the label distribution of a `tf.data.Dataset` efficiently? The naive option is to use something like this: `import tensorflow as tf; import numpy as np; import collections`, then `num_classes = 2; num_samples = 10000; data_np = np.random.choice(num_classes, num_samples); y = collections.defaultdict(int)`, and finally the loop `for i in dataset: cls, _ = i; y[cls.numpy()] += 1`.
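A compact variant of the naive count uses `collections.Counter`. The sketch below accepts any iterable of `(features, label)` pairs. With tf.data, one assumed way to obtain such pairs from a batched dataset with integer labels is `dataset.unbatch().as_numpy_iterator()`. The function name and the sample pairs are invented for illustration.

```python
from collections import Counter


def label_distribution(pairs):
    """Count how often each label occurs in an iterable of (features, label) pairs."""
    return Counter(int(label) for _, label in pairs)


# Hypothetical examples: the feature values are irrelevant to the count.
pairs = [("img0", 0), ("img1", 1), ("img2", 1), ("img3", 0), ("img4", 1)]
counts = label_distribution(pairs)
print(dict(counts))  # {0: 2, 1: 3}
```

This still makes one full pass over the data, so it is tidier than the naive loop but not faster.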

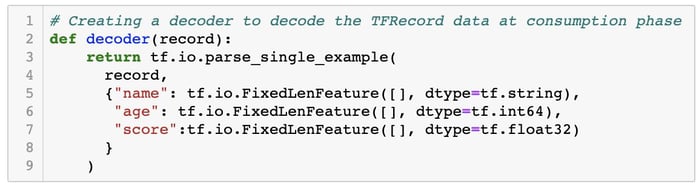

TPU-speed data pipelines: tf.data.Dataset and TFRecords The goal is to learn to categorize them into 5 flower types. Data loading is performed using the tf.data.Dataset API. First, let us get to know the API. Hands-on. Please open the following notebook, execute the cells (Shift-ENTER) and follow the instructions wherever you see a "WORK REQUIRED" label. Fun with tf.data.Dataset (playground).ipynb

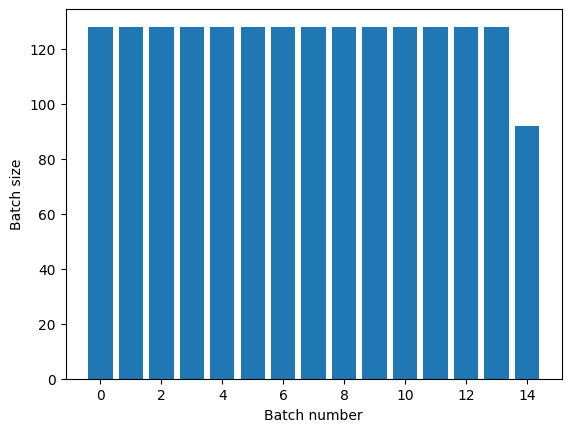
